Introduction

Imagine you’re on a construction site, surrounded by noise, dust, and a maze of materials. You need a robotic arm to install a drywall panel, but shouting over the din is hopeless, and typing commands is too slow. What if you could just point at the panel, say “put that here,” and have the robot understand you perfectly? That is no longer science fiction. It is happening now, thanks to research that merges Virtual Reality (VR), AI, and robotics.

In a 2024 study titled “Integrating Large Language Models with Multimodal Virtual Reality Interfaces to Support Collaborative Human-Robot Construction Work,” researchers Somin Park, Carol C. Menassa, and Vineet R. Kamat introduced a system that lets construction workers control robots using a combination of voice commands and hand gestures in a VR environment. The results? Less mental strain, fewer errors, and a more natural way of working together with machines.

This blog breaks down their innovative system in simple terms, explains why it matters, and imagines how it might change the face of construction and beyond.

Why Do We Need Robots in Construction?

Construction presents a unique set of challenges. It demands physical strength and endurance, and it requires workers to navigate hazards and unpredictable conditions every day. Human workers contribute invaluable skills: expertise gained from experience, the ability to adapt to changing circumstances, and effective problem-solving. Yet despite these strengths, they remain vulnerable to injury, fatigue, and human error, all of which can significantly affect safety and productivity on site.

In contrast, robots bring a different set of advantages to the construction field. They offer unmatched precision in tasks such as drilling and cutting, possess substantial strength for heavy lifting, and have the capacity to perform repetitive tasks without experiencing tiredness. This means that, in many scenarios, robots can work longer hours and maintain consistent performance levels without the physical limitations that human workers encounter.

The most promising approach to enhancing the construction process is through Human-Robot Collaboration (HRC). This model envisions an environment where humans and robots operate alongside one another, leveraging the unique strengths of both to achieve superior results. In this collaborative framework, humans could focus on the complex, creative, and adaptive tasks that require critical thinking and decision-making, while robots handle responsibilities that require precision or repetitive effort.

However, implementing HRC raises a significant challenge: establishing an effective means of communication between humans and robots. For collaboration to succeed, the interaction must be fast, easy to understand, and intuitive. That means developing capable algorithms and user interfaces, and also ensuring that each party can interpret and respond to the other's signals in real time, so the workflow stays productive and safe on construction sites.

The Communication Problem

In the fast-paced environment of a construction site, verbal communication stands out as the most intuitive and efficient way to relay instructions. However, the setting makes it hard to get right. Background noise from machinery and tools, the range of accents among workers, and the specialized jargon of the construction industry can all create significant barriers, even for advanced speech recognition systems.

For instance, an instruction like “install the 4×8 drywall panel on stud 606” is clear and precise, but it can easily go wrong. If the speech recognition technology mistakenly interprets “606” as “605,” the result could be a costly error: wasted materials, delays, and potentially a compromised project.

Historically, efforts to enhance robot interaction in these high-demand settings have typically focused on singular methods of input, relying solely on voice commands or gestures. Each of these approaches carries its own set of limitations: speech input can be misinterpreted due to noise or accents, while gesture controls may lack precision or clarity in communication.

This raises an intriguing question: what if we could innovate new ways to combine these modes of interaction? By integrating both speech and gesture inputs, we could create a more robust and reliable system for interacting with robots on construction sites, significantly reducing the likelihood of misunderstandings and improving overall efficiency.

The Solution: A Multimodal VR Interface

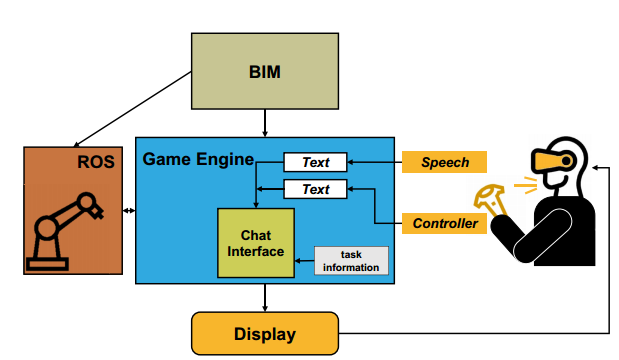

The research team built a system that integrates:

- Voice commands (speech)

- Hand controller inputs in VR (gestures)

- A Large Language Model (GPT-4) as a “translator” and assistant

- Building Information Modeling (BIM) for real-time data on materials

- A Robot Operating System (ROS) to control the robot

- A Unity-based VR environment to visualize everything

Here’s how it works, step by step:

Step 1: You Put on a VR Headset

Instead of standing in a noisy, dangerous construction site, you enter a virtual replica of it. You see the same studs, drywall panels, tools, and even a virtual robot arm. Everything is modelled from real BIM data, so it’s accurate down to the millimeter.

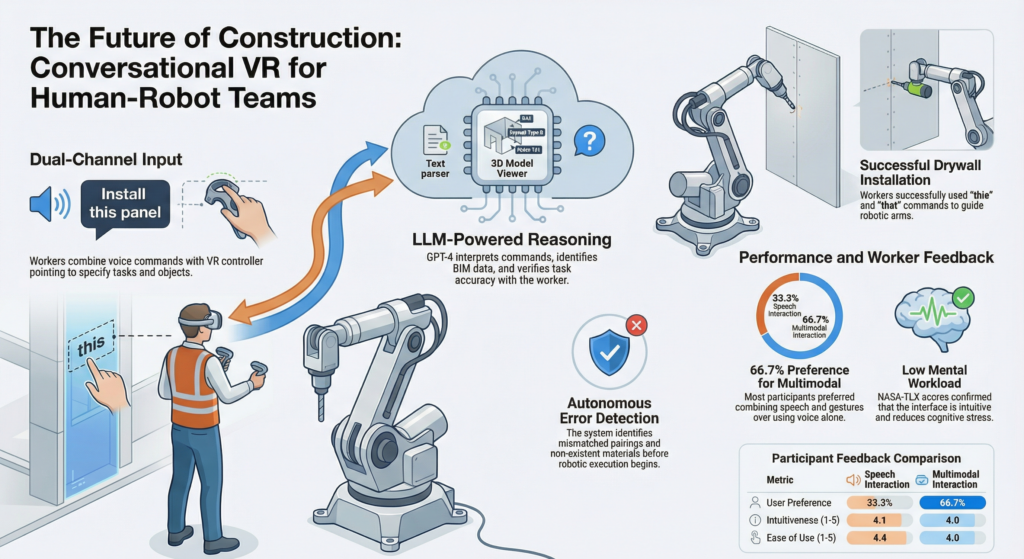

Step 2: You Speak and Point

To give an instruction, you simply point at an object using a handheld VR controller (like a laser pointer) and speak a natural command. For example:

- You point at a drywall panel and say, “Pick this up.”

- You point at a stud and say, “Place it here.”

The system captures both inputs:

- Your speech is converted to text using Whisper, an AI speech recognition tool.

- Your controller selects the object and pulls its unique ID from the BIM database.

These two pieces of information are combined into a single instruction, like:

“Pick this up. The ID of the target object is 504.”
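
The merging step above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' code: the `Selection` class and the `build_instruction` function are hypothetical names, and the transcript is assumed to have already come back from the speech recognizer as plain text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Selection:
    """Object picked with the VR controller (hypothetical structure)."""
    bim_id: int  # unique ID looked up from the BIM data


def build_instruction(transcript: str, selection: Selection) -> str:
    """Append the selected object's BIM ID to the transcribed speech."""
    spoken = transcript.strip()
    # Make sure the spoken part ends like a sentence before adding the ID.
    if not spoken.endswith((".", "!", "?")):
        spoken += "."
    return f"{spoken} The ID of the target object is {selection.bim_id}."


print(build_instruction("Pick this up", Selection(bim_id=504)))
# prints: Pick this up. The ID of the target object is 504.
```

The point of the design is that the worker never has to say the ID out loud: the pointer supplies it, so a misheard number cannot creep in through speech.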

Step 3: The AI Double-Checks

Before the robot does anything, the instruction is sent to GPT-4, which acts as a virtual assistant. GPT-4 reads the command, understands the intent, and then asks you for confirmation.

For example, it might reply:

“I understand you want to pick up Panel 504 and install it on Stud 606. Is that correct?”

This bidirectional communication is key. If the AI misunderstood, or if you gave a wrong instruction, you can correct it before anything happens in the real world.
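
Here is a rough sketch of that confirmation step, written in Python. It assumes the language model has already turned the instruction into a structured task; the `PickAndPlace` class, `confirmation_prompt`, and `resolve` are hypothetical names for illustration, and no real model is called.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PickAndPlace:
    """Intent the language model is assumed to have extracted (hypothetical)."""
    panel_id: int
    stud_id: int


def confirmation_prompt(task: PickAndPlace) -> str:
    """Phrase the understood task as a question for the worker."""
    return (
        f"I understand you want to pick up Panel {task.panel_id} "
        f"and install it on Stud {task.stud_id}. Is that correct?"
    )


def resolve(task: PickAndPlace, reply: str) -> Optional[PickAndPlace]:
    """Return the task only on an explicit yes; anything else returns None."""
    accepted = {"yes", "y", "correct"}
    return task if reply.strip().lower() in accepted else None


task = PickAndPlace(panel_id=504, stud_id=606)
print(confirmation_prompt(task))
print(resolve(task, "Yes"))            # the task is approved
print(resolve(task, "No, stud 605"))   # None: nothing is executed
```

Treating every reply other than a clear yes as a rejection is a deliberately cautious choice in this sketch: a misunderstanding costs one more question instead of a wrongly placed panel.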

Step 4: The Robot Acts

Once you approve, the instruction is sent to the robot via ROS. The robot plans its motion, avoids collisions, and performs the task, whether it’s picking up, placing, or moving materials.
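
The hand-off to the robot could look something like the Python sketch below. It does not use ROS itself: the `publish` argument stands in for whatever actually sends the message (for example, a ROS publisher), and the message fields are assumptions made for illustration, not the format used in the study.

```python
import json
from typing import Callable


def dispatch(panel_id: int, stud_id: int, confirmed: bool,
             publish: Callable[[str], None]) -> bool:
    """Send a pick-and-place request only after the worker has confirmed it."""
    if not confirmed:
        return False  # nothing reaches the robot without approval
    message = json.dumps({
        "action": "pick_and_place",  # hypothetical field names
        "panel_id": panel_id,
        "stud_id": stud_id,
    })
    publish(message)
    return True


# Stand-in for the robot side: collect messages in a list.
sent = []
dispatch(504, 606, confirmed=True, publish=sent.append)
print(sent[0])
```

Passing the sender in as a function keeps the approval gate separate from the transport, so the same check works whether the message goes to a simulator or to a real robot.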

Real-World Example: Drywall Installation

In the study, 12 construction workers tested the system on a drywall installation task, a classic “pick-and-place” job that robots are well-suited for.

Without the system:

A worker might have to say something like: “Robot, please pick up the 4×8 drywall panel labeled 503 and install it on the second stud from the left, but make sure it’s aligned vertically and flush with the top plate.”

That’s detailed, tedious, and prone to miscommunication.

With the system:

The worker points at the panel, points at the stud, and says: “Put this here.”

The system fills in the details automatically using BIM data. Commands were 30% shorter on average, and workers reported less mental effort.

What Did the Workers Think?

The research team didn’t just build the system. They tested it with real construction professionals. Here’s what they found:

- Lower Workload: Using NASA’s Task Load Index (a standard tool for measuring mental and physical demand), workers reported low overall workload with the multimodal system. Speech-only interaction was slightly more mentally demanding, likely because workers had to remember and verbalize precise IDs and locations.

- High Intuitiveness and Ease of Use: On a scale of 1–5, both speech and multimodal methods scored above 4 for intuitiveness and ease of use. But two-thirds of workers preferred the multimodal system because it felt faster, more accurate, and more engaging.

- Fewer Errors: Workers were asked to intentionally give wrong commands to test the AI’s error detection. GPT-4 caught 7% of errors, including mismatched materials (e.g., trying to place a large panel where only a small one fits), references to nonexistent parts, and instructions for already installed components.

When it missed an error, the system still asked for confirmation, giving the human a final chance to correct it.
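
To make the three error types concrete, here is a small Python sketch that checks a command against a toy BIM table. Note the swap: in the study, GPT-4 did this checking, while the sketch below uses plain rules. The `Panel` and `Slot` classes, the `check_command` function, and all IDs and widths are made up for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Panel:
    width_mm: int
    installed: bool = False


@dataclass
class Slot:
    """Opening on the stud wall that a panel can fill (hypothetical)."""
    width_mm: int


def check_command(panel_id: int, slot_id: int,
                  panels: Dict[int, Panel], slots: Dict[int, Slot]) -> List[str]:
    """Return a list of problems; an empty list means the command looks valid."""
    problems = []
    panel = panels.get(panel_id)
    slot = slots.get(slot_id)
    if panel is None:
        problems.append(f"Panel {panel_id} does not exist.")
    if slot is None:
        problems.append(f"Target {slot_id} does not exist.")
    if panel is not None and panel.installed:
        problems.append(f"Panel {panel_id} is already installed.")
    if panel is not None and slot is not None and panel.width_mm > slot.width_mm:
        problems.append(f"Panel {panel_id} is too wide for target {slot_id}.")
    return problems


# Toy data with invented values.
PANELS = {503: Panel(1219), 504: Panel(1219, installed=True), 505: Panel(610)}
SLOTS = {606: Slot(1219), 607: Slot(610)}

print(check_command(503, 607, PANELS, SLOTS))  # large panel, small opening
print(check_command(999, 606, PANELS, SLOTS))  # nonexistent panel
print(check_command(504, 606, PANELS, SLOTS))  # already installed
```

Rules like these only catch what they were written for, which is one reason a language model that reads the BIM data alongside the command can be attractive: it can flag problems nobody thought to hard-code.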

Why This Matters Beyond the Lab

The implications of this system extend far beyond just drywall installation or construction processes. It signifies a revolutionary shift in human-machine collaboration, with the potential to transform various industries. Here’s an in-depth look at where this technology could lead us:

In Construction:

- Remote Operation: Imagine a scenario where construction workers can operate robots from a comfortable, secure office located miles away from a hazardous construction site. This setup not only enhances safety by minimizing the risk of injury from dangerous tasks but also allows for greater efficiency as workers can oversee multiple sites simultaneously without the physical demands of on-site presence.

- Training Opportunities: New employees entering the construction industry can benefit immensely from virtual reality (VR) training modules. These immersive experiences allow trainees to practice intricate tasks and familiarize themselves with equipment and materials in a controlled, virtual environment before they engage in real-world applications. This hands-on simulation helps build competence and confidence, potentially reducing error rates on actual job sites.

- Addressing an Aging Workforce: As skilled workers retire, there is a pressing need to capture and preserve their invaluable knowledge. AI-assisted robots can help fill this gap by complementing the expertise of older workers and letting them share their insights in real time through enhanced training tools. This helps maintain productivity and ensures that critical skills are passed on to newer generations.

In Other Industries:

- Manufacturing: In the manufacturing sector, workers can operate sophisticated robotic systems using intuitive gestures and verbal commands. This level of interaction streamlines the production process, allowing workers to focus on quality control and problem-solving while robots handle routine tasks. Enhanced ergonomics through such systems could also lead to reduced physical strain and injury among workers.

- Healthcare: The integration of VR and AI technology in healthcare stands to revolutionize surgical procedures. Surgeons could utilize advanced robotic assistants, controlled through immersive VR interfaces, to perform complex operations with increased precision. This collaboration not only improves surgical outcomes but also allows for real-time adjustments based on the surgeon’s insights during the procedure.

- Disaster Response: In the field of disaster response, teams could deploy robots to navigate precarious and hazardous environments, such as earthquake-damaged structures or toxic areas, all while operating from a safe distance. Using intuitive interfaces, rescue workers could remotely control these robots to search for survivors, deliver supplies, or assess danger levels, significantly enhancing response times and overall safety.

This new paradigm of human-machine collaboration has the potential to transform not just individual industries but also the broader landscape of work, enabling safer, more efficient, and innovative approaches to tackling complex challenges.

Conclusion

The study shows promising progress toward more natural collaboration between humans and machines, but it also has limitations. It focused on a single, simple task, and its participants lacked diversity, so further research is needed to cover a broader range of applications and users. Automating data entry and improving visual presentation in complex environments could enhance usability and effectiveness. Ultimately, this research emphasizes the need for technology to adapt to human communication methods through intuitive interactions such as speech and gestures. The goal is a collaborative work environment where humans and robots work side by side, minimizing errors and cognitive load while enhancing engagement, and building a better world together, panel by panel.

Reference

- Park, S., Menassa, C. C., & Kamat, V. R. (2025). Integrating large language models with multimodal virtual reality interfaces to support collaborative human–robot construction work. Journal of Computing in Civil Engineering, 39(1), 04024053. https://doi.org/10.1061/JCCEE5.CPENG-6106