Imagine a world where inspecting a bridge is as simple as taking a few photos with your smartphone. No risky climbs, no traffic disruptions, and no weeks of manual analysis. Thanks to advancements in AI and computer vision, this future is closer than you think. But there’s a catch: training these AI systems requires massive amounts of labelled data—something that’s scarce in the world of infrastructure. Enter synthetic data, the unsung hero making this vision a reality.

In this article, we’ll explore research by Fountoukidou et al. (2024), who developed PFBridge, a synthetic dataset designed to automate the inspection of portal frame bridges. We’ll break down how they built realistic 3D bridge models, trained AI systems to recognize structural components, and integrated their work into a mobile app for real-world use.

Bridges are the backbone of modern infrastructure, but they’re also ageing rapidly. In the U.S. alone, over 42% of bridges are at least 50 years old, and many require urgent repairs. Traditional inspections involve engineers scaling scaffolds, dodging traffic, and manually documenting cracks, rust, and wear—a process that’s time-consuming, expensive, and dangerous.

While AI-powered tools could automate much of this work, they face a major hurdle: training data. To teach an AI model to recognize bridge components like decks, abutments, or wing walls, you need thousands of labelled images. However, manually annotating these images is tedious, and real-world bridge photos are hard to collect consistently.

This is where synthetic data excels. By generating artificial yet realistic images of bridges, researchers can develop extensive and diverse datasets to train robust AI models—without depending solely on limited real-world data. The team behind PFBridge tackled this challenge head-on by creating a parametric 3D model of portal frame bridges—a common bridge type known for its monolithic concrete structure. Here’s how they did it:

Step 1: Designing a Parametric 3D Model

A parametric model works like a digital Lego set for bridges: each component (deck, abutments, wing walls) is defined by a set of mathematical rules and constraints. For instance, the dimensions of the deck must match the lengths and widths of the abutments so that the parts fit together. Other rules govern height ratios; wing walls, for example, cannot be taller than the abutments they connect to. To better mimic real structures, some components are slightly tilted, introducing minor imperfections. With 165 parameters, 96 of them randomized, the model can generate unique yet structurally plausible bridge designs. This approach increases the diversity of the dataset while keeping every design consistent with basic engineering rules.
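The constraint logic can be illustrated with a toy generator. This is a minimal sketch, not the authors' model: the parameter names (`deck_length`, `abutment_height`, `wing_wall_height`, and so on), their ranges, and the tilt limit are all hypothetical, and it uses seven parameters instead of 165. It shows the core idea: randomize the free parameters, then derive or bound the dependent ones so that every sample stays geometrically consistent.

```python
import random
from dataclasses import dataclass


@dataclass
class PortalFrameBridge:
    # Hypothetical parameter set (metres and degrees), far smaller than the real 165
    deck_length: float       # clear span between the two abutments
    deck_width: float
    abutment_height: float
    abutment_length: float   # measured across the bridge
    wing_wall_height: float
    wing_wall_angle: float   # plan angle of the wing wall relative to the abutment face
    tilt: float              # small random lean applied to the abutments


def sample_bridge(rng: random.Random, max_tilt: float = 1.0) -> PortalFrameBridge:
    """Randomize the free parameters, then derive the constrained ones."""
    deck_width = rng.uniform(6.0, 14.0)
    abutment_height = rng.uniform(3.0, 7.0)
    return PortalFrameBridge(
        deck_length=rng.uniform(4.0, 20.0),
        deck_width=deck_width,
        abutment_height=abutment_height,
        # Constraint: the abutments run the full width of the deck they support
        abutment_length=deck_width,
        # Constraint: a wing wall is never taller than the abutment it joins
        wing_wall_height=rng.uniform(0.5, 1.0) * abutment_height,
        wing_wall_angle=rng.uniform(0.0, 45.0),
        # Imperfection: a slight lean of up to max_tilt degrees either way
        tilt=rng.uniform(-max_tilt, max_tilt),
    )


def is_valid(b: PortalFrameBridge) -> bool:
    """Check the rules every generated bridge must satisfy."""
    return (
        b.deck_length > 0
        and b.abutment_length == b.deck_width
        and b.wing_wall_height <= b.abutment_height
    )


rng = random.Random(42)  # fixed seed: the same seed reproduces the same bridges
bridges = [sample_bridge(rng) for _ in range(1000)]
print(sum(is_valid(b) for b in bridges), "of", len(bridges), "bridges are valid")
```

Deriving dependent values from independent ones, rather than sampling everything and rejecting invalid combinations, means no samples are wasted; fixing the seed makes it possible to regenerate exactly the same geometry later, for example to re-render it with different lighting.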

Step 2: Adding Realism with Blender

Raw 3D models often look like props from a video game: clean, uniform surfaces that photographs of real bridges rarely show. A model trained only on such pristine renders tends to learn cues that do not carry over to real photos. To narrow this gap, the team used Blender, an open-source 3D creation suite, and worked in three steps.

First, they applied textures to every concrete surface, adding cracks to suggest wear, stains to suggest age, and weathering effects to simulate exposure to the elements. This made the models look far less synthetic.

Next, they varied the lighting. Dynamic skies, fog, and shadows let them reproduce a range of weather, from bright sunny days to overcast, gloomy conditions.

Finally, they placed virtual cameras where a human inspector would stand, capturing close-up shots from eye level and at the angles one would expect during an actual inspection.

Together, these techniques produced thousands of synthetic images that closely resemble photographs taken by an engineer on site.
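The randomization of viewpoint and weather can be sketched as a function that draws one set of scene settings per render. This is an illustration under stated assumptions, not the team's pipeline: the names (`RenderSettings`, `sample_settings`), the eye-height range of 1.5 to 1.8 m, the 8 to 20 m standing distance, and the sun and fog ranges are all hypothetical. The code only produces parameter values; converting yaw and pitch into a Blender camera rotation and applying them through Blender's Python API is deliberately left out.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class RenderSettings:
    camera_xyz: tuple[float, float, float]
    yaw_deg: float            # heading in the ground plane, 0 = +x axis
    pitch_deg: float          # positive = looking up
    sun_elevation_deg: float
    fog_density: float        # 0 = clear air


def look_at_angles(cam, target):
    """Yaw and pitch (degrees) that point a camera at `cam` toward `target`."""
    dx, dy, dz = (t - c for t, c in zip(target, cam))
    yaw = math.degrees(math.atan2(dy, dx))
    pitch = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return yaw, pitch


def sample_settings(rng, bridge_center=(0.0, 0.0, 0.0), bridge_height=5.0):
    # Stand somewhere on a ring around the bridge, at (assumed) human eye level
    angle = rng.uniform(0.0, 2.0 * math.pi)
    radius = rng.uniform(8.0, 20.0)
    cam = (
        bridge_center[0] + radius * math.cos(angle),
        bridge_center[1] + radius * math.sin(angle),
        rng.uniform(1.5, 1.8),
    )
    # Aim at a random height on the structure, as an inspector scanning it might
    target = (bridge_center[0], bridge_center[1], rng.uniform(0.0, bridge_height))
    yaw, pitch = look_at_angles(cam, target)
    return RenderSettings(
        camera_xyz=cam,
        yaw_deg=yaw,
        pitch_deg=pitch,
        sun_elevation_deg=rng.uniform(5.0, 75.0),        # low sun up to near noon
        fog_density=rng.choice([0.0, 0.0, 0.01, 0.05]),  # clear air twice as likely
    )


rng = random.Random(7)
for settings in (sample_settings(rng) for _ in range(3)):
    print(settings)
```

Keeping the sampling separate from the renderer makes the distribution of viewpoints and weather easy to inspect and tune before any expensive rendering happens.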

Step 3: Automating Annotations

In the real world, labelling every pixel of a photo as “deck” or “abutment” by hand takes a long time. In a synthetic scene the labels come almost for free, because the renderer already knows which object each pixel belongs to. In Blender, for example, each part of the model can be given its own index, and the renderer can output an index pass alongside the colour image; converting that pass into per-class masks yields a pixel-accurate annotation for every rendered frame. Because no one draws the labels by hand, this reduces human annotation errors and makes the whole process much faster.
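Assuming the renderer writes an integer object index for every pixel (for instance Blender's object index pass, with each object's pass index set before rendering), turning it into a semantic label map is a simple lookup. The index-to-class mapping below is hypothetical, the tiny 3×4 grid stands in for a real render, and a production pipeline would use array libraries rather than nested lists.

```python
# Hypothetical mapping from object pass index to semantic class
INDEX_TO_CLASS = {
    0: "background",
    1: "deck",
    2: "abutment",
    3: "abutment",   # second abutment, same class
    4: "wing_wall",
    5: "wing_wall",
}
CLASS_IDS = {"background": 0, "deck": 1, "abutment": 2, "wing_wall": 3}


def index_pass_to_labels(index_pass):
    """Map each pixel's object index to a semantic class id."""
    return [[CLASS_IDS[INDEX_TO_CLASS[i]] for i in row] for row in index_pass]


def binary_mask(label_map, class_name):
    """1 where a pixel belongs to class_name, else 0."""
    cid = CLASS_IDS[class_name]
    return [[int(v == cid) for v in row] for row in label_map]


# Toy 3x4 object index pass standing in for one rendered frame
index_pass = [
    [0, 1, 1, 0],
    [2, 1, 1, 3],
    [4, 2, 3, 5],
]
labels = index_pass_to_labels(index_pass)
print(labels)                       # [[0, 1, 1, 0], [2, 1, 1, 2], [3, 2, 2, 3]]
print(binary_mask(labels, "deck"))  # [[0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
```

Several objects can share one class (both abutments above map to `abutment`), so the per-object indices stay available for instance-level work while the training labels are semantic.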

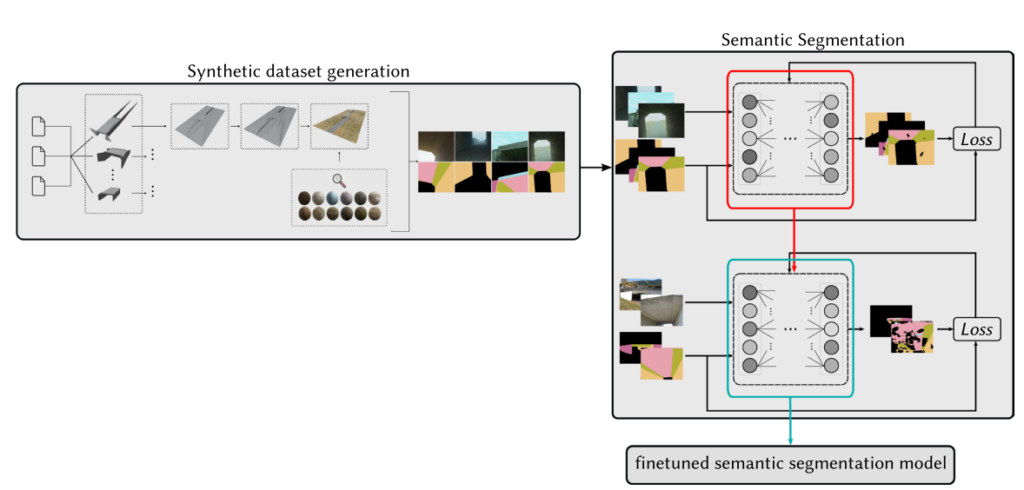

Next, the team trained an AI model on PFBridge’s synthetic images together with a small set of real photographs. Training unfolded in two distinct stages, the first of which was a “Synthetic Data Bootcamp”.

In this phase, the team trained a U-Net (illustrated in the figure above), an architecture widely used for semantic segmentation, on 1911 synthetic images. Although artificial, these images taught the model to identify the main components of a bridge: decks are the flat structures that extend the length of the bridge, abutments are the vertical walls that support the deck, and wing walls are the angled walls that extend out from the abutments. The model reached a 95% F1-score on the synthetic test images, showing that it had learned to recognize these components reliably, at least within the synthetic domain.
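For a segmentation task, a class's F1-score is the harmonic mean of precision and recall counted over pixels, which simplifies to 2·TP / (2·TP + FP + FN). A macro F1-score, the figure reported for real images, averages the per-class values so that a small class such as wing walls counts as much as a large one. The sketch below computes both from a predicted and a ground-truth label map. The paper's exact protocol is not described here, so one choice is labelled as an assumption: a class absent from both maps is skipped rather than scored.

```python
def per_class_f1(pred, truth, num_classes):
    """Pixel-wise F1 for each class id in range(num_classes); None if class absent."""
    tp = [0] * num_classes
    fp = [0] * num_classes
    fn = [0] * num_classes
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p == t:
                tp[t] += 1
            else:
                fp[p] += 1  # pixel predicted as p but belongs elsewhere
                fn[t] += 1  # pixel of class t that was missed
    scores = []
    for c in range(num_classes):
        denom = 2 * tp[c] + fp[c] + fn[c]
        # Assumption: a class missing from both maps is excluded, not scored
        scores.append(None if denom == 0 else 2 * tp[c] / denom)
    return scores


def macro_f1(pred, truth, num_classes):
    """Unweighted mean of the per-class F1 scores over classes that occur."""
    scores = [s for s in per_class_f1(pred, truth, num_classes) if s is not None]
    return sum(scores) / len(scores)


# Toy 2x3 maps: 0 = background, 1 = deck, 2 = abutment
truth = [[0, 1, 1], [2, 2, 1]]
pred = [[0, 1, 2], [2, 2, 1]]
print(per_class_f1(pred, truth, 3))        # [1.0, 0.8, 0.8]
print(round(macro_f1(pred, truth, 3), 3))  # 0.867
```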

In the second stage, “Fine-Tuning with Real Data”, the pretrained model was trained further on just 88 real images. This step bridges the gap between synthetic and real-world imagery, helping the model adapt to variations in lighting, viewing angle, and other details that the synthetic renders do not capture. The results were encouraging: on real test images, the fine-tuned model reached a 67% macro F1-score, compared with 39% for a model trained exclusively on real data, an improvement of 28 percentage points. Combining synthetic pretraining with a small amount of real data therefore led to clearly better performance on real-world images.
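Because both scores are percentages, the gain between them can be stated in absolute or in relative terms. Taking the reported real-image scores at face value (67% with synthetic pretraining, 39% with real data alone):

$$
\Delta_{\text{abs}} = 67 - 39 = 28 \text{ percentage points},
\qquad
\Delta_{\text{rel}} = \frac{67 - 39}{39} \approx 0.72
$$

A 28-point absolute gain therefore corresponds to a relative improvement of roughly 72%, meaning the fine-tuned model's macro F1-score is about 1.7 times that of the real-data-only baseline.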

Synthetic data is gaining popularity across many research fields, and for good reasons. Instead of spending months collecting more real images, researchers can generate large numbers of unique images quickly, sometimes in just a few hours. It also gives them more control, letting them create rare scenarios that are hard to capture in reality, such as severe damage to a structure. And it saves money, since it reduces the need for expensive fieldwork and labour-intensive labelling. In a sense, synthetic data acts like training wheels for AI, letting a model learn in a controlled setting before it faces the unpredictable nature of real-world data.

Beyond the dataset itself, the team developed a mobile app that uses LiDAR, the same kind of depth sensor found in recent Pro-model iPhones, to build 3D point clouds of bridges. The workflow is straightforward: users walk around the bridge while the app records LiDAR data. Meanwhile, the AI segments the video frames and labels parts of the bridge, such as the deck or the abutments. These labels are then mapped onto the 3D point cloud, producing a labelled digital twin (a virtual replica) of the bridge. Such a digital twin is more than a pretty 3D render: it could give engineers a basis for measuring defects such as cracks, running simulations to anticipate future wear, and keeping maintenance records up to date automatically.
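One common way to carry 2D labels onto a point cloud, shown here as an assumption rather than the app's documented method, is to project each 3D point into every segmented frame with a pinhole camera model, read the label of the pixel it lands on, and take a majority vote across frames. The function names, the world-to-camera pose format (rotation `R`, translation `t`), the camera axes (x right, y down, z forward) and the intrinsics `fx`, `fy`, `cx`, `cy` are all hypothetical; a real app would take poses and intrinsics from the device's tracking system.

```python
from collections import Counter


def world_to_camera(p, R, t):
    """Apply the world-to-camera transform p_cam = R @ p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(p_cam, fx, fy, cx, cy):
    """Pinhole projection to the nearest (row, col) pixel, or None if behind the camera."""
    x, y, z = p_cam
    if z <= 0:
        return None
    col = round(fx * x / z + cx)
    row = round(fy * y / z + cy)
    return row, col


def label_points(points, frames, intrinsics):
    """Majority-vote label per 3D point over all frames where it is visible.

    frames: list of (label_map, R, t) tuples, one per segmented video frame.
    Points seen in no frame get None.
    """
    fx, fy, cx, cy = intrinsics
    labels = []
    for p in points:
        votes = Counter()
        for label_map, R, t in frames:
            rc = project(world_to_camera(p, R, t), fx, fy, cx, cy)
            if rc is None:
                continue
            r, c = rc
            if 0 <= r < len(label_map) and 0 <= c < len(label_map[0]):
                votes[label_map[r][c]] += 1
        labels.append(votes.most_common(1)[0][0] if votes else None)
    return labels


# Toy example: one frame, identity pose; top half labelled 1 (deck), bottom 2 (abutment)
identity = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
label_map = [[1, 1, 1, 1], [1, 1, 1, 1], [2, 2, 2, 2], [2, 2, 2, 2]]
points = [(0.0, -0.75, 2.0), (0.0, 0.75, 2.0), (0.0, 0.0, -1.0), (10.0, 0.0, 1.0)]
frames = [(label_map, identity, (0.0, 0.0, 0.0))]
print(label_points(points, frames, (2.0, 2.0, 2.0, 2.0)))  # [1, 2, None, None]
```

Voting across many frames makes the result robust to occasional segmentation mistakes in any single frame; the third point is behind the camera and the fourth projects outside the image, so neither receives a label.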

The PFBridge project is a significant step forward for automated bridge inspection, but the team acknowledges several limitations. The parametric model supports only straight edges, so it cannot yet represent curved components such as arches, which many bridges feature. Its textures also lack variety; environmental details such as graffiti and vegetation would add realism. Finally, the segmentation model needs optimizing for mobile devices, because real-time inspection requires faster inference. Looking ahead, the team aims to extend the dataset to a wider range of bridge types and to integrate augmented reality (AR) tools for on-site inspections.

Overall, PFBridge shows how synthetic data can make AI practical in specialized fields by turning 3D models into valuable training data. This approach could make bridge inspections safer, cheaper, and faster, letting engineers spend less effort on the physical demands of inspection and more on solving engineering problems. For the general public, that translates into safer roads and smarter infrastructure maintenance. The PFBridge dataset and code are available to researchers worldwide, encouraging collaboration on modernizing infrastructure; that collaboration may well be the strongest bridge of all.

References

Fountoukidou, T., Tkachenko, I., Poli, B., & Miguet, S. (2024). Extensible portal frame bridge synthetic dataset for structural semantic segmentation. AI in Civil Engineering, 3(1), 1–18. https://doi.org/10.1007/s43503-024-00041-7

Vardanega, P. J., Webb, G. T., Fidler, P. R. A., Huseynov, F., Kariyawasam, K. K. G. K. D., & Middleton, C. R. (2022). Bridge monitoring (Chapter 32). In Innovative bridge design (pp. 893–932). New York: Butterworth-Heinemann.

Hüthwohl, P., Lu, R., & Brilakis, I. (2016). Challenges of bridge maintenance inspection. https://doi.org/10.17863/CAM.26634

Dang, N., & Shim, C. (2020). BIM-based innovative bridge maintenance system using augmented reality technology. In CIGOS 2019, Innovation for Sustainable Infrastructure: Proceedings of the 5th International Conference on Geotechnics, Civil Engineering Works and Structures (pp. 1217–1222). Springer.

Kilic, G., & Caner, A. (2021). Augmented reality for bridge condition assessment using advanced non-destructive techniques. Structure and Infrastructure Engineering, 17(7), 977–989.

Ham, Y., Han, K. K., Lin, J. J., & Golparvar-Fard, M. (2016). Visual monitoring of civil infrastructure systems via camera-equipped unmanned aerial vehicles (UAVs): A review of related works. Visualization in Engineering, 4(1), 1–8.